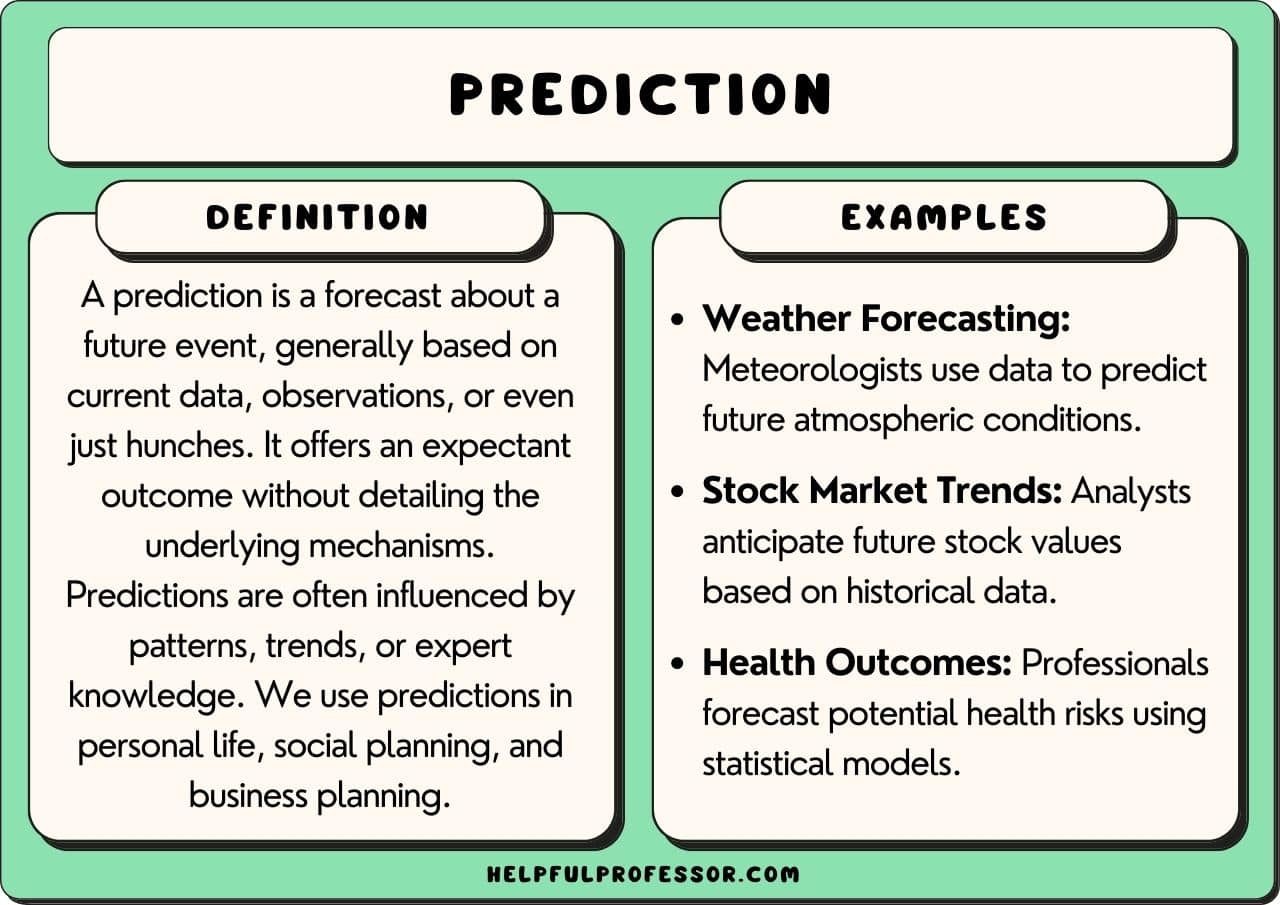

Understanding how to predict engagement rates using AI has become essential for content creators and marketers seeking to optimize their social media performance. By applying machine learning to historical interaction data, businesses can gain insight into how audiences respond to different content and tailor their strategies accordingly. This approach can improve content relevance as well as reach and impact across platforms.

In this comprehensive overview, we explore the fundamental concepts of AI-driven engagement prediction, including data collection, feature analysis, machine learning models, and practical applications. By examining these areas, readers will understand how to harness AI tools effectively to forecast engagement metrics such as likes, comments, shares, and views, driving more informed decision-making in digital marketing campaigns.

Overview of AI in Predicting Engagement Rates

In the rapidly evolving landscape of social media marketing, leveraging artificial intelligence (AI) to forecast engagement rates has become a vital tool for content creators and marketers alike. AI-driven predictive models enable a more precise understanding of how audiences interact with content, facilitating strategic decision-making that maximizes reach and impact.

Accurate engagement prediction offers numerous advantages, including optimized content scheduling, more precise audience targeting, and more effective allocation of marketing resources. By harnessing AI, organizations can anticipate user interactions such as likes, comments, shares, and views, and refine their overall content strategy to foster higher engagement and brand loyalty.

Different Types of Engagement and AI Analysis

Engagement metrics serve as key indicators of content performance on social media platforms. These metrics include likes, comments, shares, and views, each representing a distinct form of audience interaction. AI models learn from historical engagement data, identifying patterns that link post attributes such as content type, timing, and audience to these outcomes, and use those patterns to predict future engagement levels.

Understanding the nuances of each engagement type is crucial for effective prediction:

- Likes: Indicate initial approval or interest. AI models assess factors like content type, timing, and audience demographics to forecast potential likes.

- Comments: Reflect deeper engagement and audience sentiment. Natural language processing (NLP) techniques help AI analyze comment content to gauge emotional response and likelihood of future interactions.

- Shares: Signify content value and virality. AI algorithms evaluate share frequency in relation to content themes and user networks, predicting the potential for content to spread organically.

- Views: Critical for video content, views quantify overall reach. AI models consider factors such as thumbnail appeal, video duration, and previous viewership patterns to estimate future view counts.

By integrating these diverse engagement signals, AI models construct comprehensive predictive frameworks. For example, a machine learning system trained on historical engagement data from a specific industry can forecast how a new campaign will perform, enabling marketers to tailor content features accordingly. This predictive capability helps keep content strategies agile, data-informed, and aligned with audience preferences.
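Engagement rate normalizes raw interaction counts by audience size so that posts with different reach can be compared. The article does not fix a formula; the sketch below assumes a common convention, total interactions divided by impressions (follower count is a frequent alternative denominator). The PostMetrics class and the impressions figure are hypothetical; the like, comment, and share counts reuse the first row of the sample table later in this article.

```python
from dataclasses import dataclass


@dataclass
class PostMetrics:
    """Raw interaction counts for one post (field names are illustrative)."""
    likes: int
    comments: int
    shares: int
    impressions: int  # assumed denominator; follower count is a common alternative


def engagement_rate(m: PostMetrics) -> float:
    """Return (likes + comments + shares) / impressions, or 0.0 if there were no impressions."""
    if m.impressions <= 0:
        return 0.0
    return (m.likes + m.comments + m.shares) / m.impressions


# Hypothetical post: 1,380 interactions out of 46,000 impressions.
post = PostMetrics(likes=1200, comments=150, shares=30, impressions=46000)
print(f"{engagement_rate(post):.2%}")
```

A single scalar like this is a convenient regression target; weighting comments or shares more heavily than likes is another option if deeper interactions matter more to a campaign.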

Data Collection and Preparation

Effective prediction of social media engagement rates using AI hinges on the quality and comprehensiveness of the data collected. Gathering relevant interaction data and preparing it meticulously are crucial steps that directly influence the accuracy and reliability of the predictive models. This process involves sourcing diverse datasets, cleaning them to eliminate inconsistencies, and organizing the information into structured formats suitable for machine learning algorithms.

High-quality data collection ensures that the AI models are trained on representative and detailed interaction metrics, capturing the nuances of audience behavior across different platforms. Proper data preparation, including cleaning and handling missing entries, enhances model performance by reducing noise and biases. As social media platforms generate vast amounts of data, employing systematic approaches to gather and preprocess this information is vital for insightful engagement predictions.

Methods for Gathering Social Media Interaction Data

Collecting relevant social media interaction data involves a combination of official APIs, web scraping tools, and third-party data aggregators. Platform APIs such as the Facebook Graph API, the Twitter (now X) API, and the Instagram Graph API provide structured access to posts, likes, comments, shares, and other engagement metrics. These APIs require authentication and adherence to platform policies, and access tiers and rate limits vary by platform, but they offer the most reliable data streams for analysis.

Where APIs are limited or unavailable, web scraping can fill gaps. These methods extract data directly from social media web pages using tools like BeautifulSoup or Selenium, and should be used only where the platform's terms of service permit it. Third-party providers such as Socialbakers (now Emplifi) or Brandwatch aggregate social media data and offer ready-to-analyze datasets that can accelerate the collection process.

Data should be collected over a consistent period to account for temporal variations in engagement, such as seasonal trends or campaign effects. Including diverse content types and multiple platforms provides a holistic view of interaction patterns, which improves the robustness of AI predictions.

Sample Data Structure for Engagement Prediction

Organizing data into a structured table facilitates efficient analysis and model training. Here is an example of a typical dataset layout:

| Platform | Post Content | Engagement Metrics | Time Posted |
|---|---|---|---|
| | Image of a new product launch with a promotional caption | Likes: 1,200; Comments: 150; Shares: 30 | 2023-10-01 10:00:00 |
| Twitter | Announcement about upcoming webinar with registration link | Retweets: 300; Likes: 500; Replies: 75 | 2023-10-02 14:30:00 |
| Facebook | Behind-the-scenes video of event setup | Reactions: 850; Comments: 90; Shares: 40 | 2023-10-03 09:15:00 |

This structure captures essential aspects, enabling AI models to identify correlations between content types, posting times, and engagement outcomes.
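Because each platform reports differently named metrics (Retweets, Reactions, Replies), a preprocessing step usually maps them onto one shared schema before training. A minimal sketch, assuming metrics arrive as strings in the format shown in the table; the CANONICAL mapping is a hypothetical modeling choice, not an official cross-platform equivalence.

```python
import re

# Hypothetical mapping from platform-specific labels to a shared schema.
CANONICAL = {
    "likes": "likes",
    "reactions": "likes",
    "comments": "comments",
    "replies": "comments",
    "shares": "shares",
    "retweets": "shares",
}

# "Label: 1,234" with an optional thousands separator.
METRIC_RE = re.compile(r"\s*([A-Za-z]+)\s*:\s*(\d[\d,]*)\s*$")


def parse_metrics(raw: str) -> dict[str, int]:
    """Parse 'Likes: 1,200; Comments: 150' into {'likes': 1200, 'comments': 150, 'shares': 0}."""
    counts = {"likes": 0, "comments": 0, "shares": 0}
    for part in raw.split(";"):
        match = METRIC_RE.match(part)
        if not match:
            continue  # skip malformed fragments rather than guessing a value
        label = match.group(1).lower()
        value = int(match.group(2).replace(",", ""))
        key = CANONICAL.get(label)
        if key is not None:
            counts[key] += value
    return counts


print(parse_metrics("Retweets: 300; Likes: 500; Replies: 75"))
```

Unknown labels are ignored rather than raising, which keeps a batch job running but means new platform metrics must be added to the mapping explicitly.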

Handling Missing and Inconsistent Data Entries

Data completeness and consistency are critical for reliable AI predictions. Missing or inconsistent data entries can introduce biases and reduce model accuracy if not properly addressed. Several techniques are employed to manage these issues effectively:

- Imputation: Filling in missing values using statistical methods such as mean, median, mode, or more sophisticated approaches like k-nearest neighbors or regression imputation. For example, if engagement data for a post is missing, estimating it based on similar posts within the same platform and content category can be effective.

- Removing Incomplete Records: When data is substantially incomplete or unreliable, it may be preferable to exclude such entries to prevent skewing the model. This approach is suitable when the dataset remains sufficiently large after removal.

- Standardization and Validation: Ensuring data consistency across different platforms and time periods through normalization techniques. Validation checks can identify outliers or inconsistent entries, such as an engagement spike that is likely due to data entry errors.

- Consistent Data Formats: Standardizing date formats, text encodings, and numerical units ensures uniformity. For example, converting all timestamps to UTC and encoding text data in UTF-8 reduces discrepancies and facilitates seamless analysis.
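Several of these steps fit in a short pandas pipeline. The sketch below assumes a DataFrame with the hypothetical columns platform, content_type, likes, and posted_at. It converts timestamps to UTC, imputes missing likes with the median of similar posts (same platform and content type), and drops rows that remain unusable.

```python
import pandas as pd


def clean_engagement(df: pd.DataFrame) -> pd.DataFrame:
    """Normalize timestamps, impute missing likes by group median, drop unusable rows."""
    out = df.copy()
    # Consistent formats: parse timestamps as UTC; unparseable values become NaT.
    out["posted_at"] = pd.to_datetime(out["posted_at"], utc=True, errors="coerce")
    # Imputation: median likes of posts on the same platform with the same content type.
    group_median = out.groupby(["platform", "content_type"])["likes"].transform("median")
    out["likes"] = out["likes"].fillna(group_median)
    # Removal: drop rows with no valid timestamp or with no data to impute from.
    return out.dropna(subset=["posted_at", "likes"]).reset_index(drop=True)


# Hypothetical raw export with one missing value and one corrupt timestamp.
raw = pd.DataFrame({
    "platform": ["Facebook", "Facebook", "Facebook", "Twitter"],
    "content_type": ["video", "video", "video", "text"],
    "likes": [800.0, None, 900.0, 500.0],
    "posted_at": ["2023-10-03 09:15:00", "2023-10-04 09:00:00", "not a date", "2023-10-02 14:30:00"],
})
cleaned = clean_engagement(raw)
print(cleaned)
```

Note that the group median is computed before the corrupt row is dropped, so its likes value still informs the imputation; reorder the steps if that is undesirable.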

Implementing these procedures enhances data quality, which in turn improves the predictive capabilities of AI models. Regular audits and updates of datasets are recommended to maintain the accuracy of engagement rate predictions over time.

Machine Learning Models for Engagement Prediction

When implementing AI-driven engagement forecasting, selecting the appropriate machine learning model is crucial for achieving accurate and reliable predictions. Different algorithms excel in various data contexts, and understanding their strengths and limitations helps in making informed choices.

In this section, we compare several commonly used machine learning algorithms suitable for engagement rate prediction, outline procedures for selecting the most effective model based on data characteristics, illustrate a typical training workflow, and provide a sample implementation.

Comparison of Machine Learning Algorithms for Engagement Forecasting

Choosing the right algorithm depends on factors such as data size, feature complexity, and the nature of engagement metrics. Below are some widely adopted machine learning models:

| Algorithm | Type | Strengths | Limitations |
|---|---|---|---|
| Linear Regression | Regression | Simple, interpretable, effective with linear relationships | Performs poorly with non-linear data, sensitive to outliers |
| Decision Trees | Classification & Regression | Handles non-linear data, easy to interpret, requires minimal data preprocessing | Prone to overfitting, especially with deep trees |
| Random Forest | Ensemble of Decision Trees | High accuracy, robust to overfitting, handles high-dimensional data well | Less interpretable than single trees, computationally intensive |
| Gradient Boosting Machines (GBMs) | Ensemble learning | Excellent predictive performance, handles various data types | Long training times, sensitive to hyperparameter tuning |
| Neural Networks | Deep Learning | Captures complex, non-linear patterns, adaptable to large datasets | Requires substantial data and computational resources, less transparent |

Procedures for Model Selection Based on Data Characteristics

Effective model selection involves assessing the dataset’s specific features and aligning them with algorithm capabilities:

- Data Size: For small datasets, simpler models like linear regression or shallow decision trees are preferable. Larger datasets benefit from complex models like neural networks or ensemble methods.

- Feature Complexity: If engagement determinants are highly non-linear or involve intricate interactions, models like gradient boosting or neural networks are more suitable.

- Data Quality: Noisy datasets call for models that resist overfitting, such as random forests (which average many trees) or gradient boosting with regularization, shrinkage, and early stopping.

- Interpretability: When understanding feature impacts is important, decision trees or linear models offer more transparency.

- Computational Resources: Consider available processing power; neural networks and ensemble methods demand more resources and longer training times.
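One practical way to apply these criteria is to score a few candidate models on identical cross-validation folds and compare their errors before committing to one. A sketch assuming scikit-learn is installed, with synthetic data from make_regression standing in for a real engagement dataset:

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic placeholder: 300 posts, 8 features, engagement target with noise.
X, y = make_regression(n_samples=300, n_features=8, n_informative=5, noise=15.0, random_state=42)

candidates = {
    "linear_regression": LinearRegression(),
    "decision_tree": DecisionTreeRegressor(max_depth=5, random_state=42),
    "random_forest": RandomForestRegressor(n_estimators=100, random_state=42),
}

# Reuse the same folds for every model so the comparison is fair.
folds = KFold(n_splits=5, shuffle=True, random_state=42)
results = {}
for name, model in candidates.items():
    # scikit-learn maximizes scores, so MAE is exposed as a negative value; flip the sign.
    scores = cross_val_score(model, X, y, cv=folds, scoring="neg_mean_absolute_error")
    results[name] = -scores.mean()

for name, mae in sorted(results.items(), key=lambda item: item[1]):
    print(f"{name}: mean MAE = {mae:.2f}")
```

Because make_regression generates a linear target, linear regression should rank first here; on real engagement data with non-linear effects the ranking may well differ, which is exactly what this comparison is meant to reveal.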

Model Training Workflow and Illustration

Training a machine learning model for engagement prediction typically follows a systematic workflow with the following key steps:

Workflow Overview:

- Data Collection and Preprocessing: Clean, normalize, and encode features.

- Feature Selection: Identify the most influential variables for engagement prediction.

- Model Initialization: Choose an initial algorithm based on data insights.

- Training: Fit the model to training data, optimizing parameters.

- Validation: Evaluate performance on validation data, fine-tune hyperparameters.

- Testing: Assess model accuracy with unseen test data.

- Deployment: Use the trained model for real-time or batch engagement forecasting.

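Below is a simplified, runnable version of the training process for a regression model such as a decision tree. It assumes scikit-learn is installed and substitutes synthetic data from make_regression for the hypothetical load_training_data() and load_test_data() helpers, which would load a real engagement dataset:

```python
from sklearn.datasets import make_regression
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeRegressor

# Load dataset (synthetic stand-in: 500 posts described by 6 numeric features)
X, y = make_regression(n_samples=500, n_features=6, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Initialize model (example: decision tree, depth capped at 5 to limit overfitting)
model = DecisionTreeRegressor(max_depth=5, random_state=0)

# Train model
model.fit(X_train, y_train)

# Make predictions on the held-out test posts
predictions = model.predict(X_test)

# Evaluate performance with regression metrics
mae = mean_absolute_error(y_test, predictions)
r2 = r2_score(y_test, predictions)
print(f"Model Performance: MAE={mae:.2f}, R^2={r2:.3f}")
```

This example highlights the key steps: data loading, model initialization, training, prediction, and evaluation. Because the target is a continuous engagement value, it reports regression metrics (mean absolute error and R²) instead of accuracy, which applies only to classification. For more complex models like neural networks, frameworks such as TensorFlow or PyTorch follow similar workflows with additional steps for architecture design and hyperparameter tuning.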

Model Evaluation and Optimization

Effective evaluation and optimization of AI models are essential steps in ensuring accurate and reliable predictions of engagement rates. These processes help identify the strengths and weaknesses of different models, guide hyperparameter tuning, and prevent overfitting. By systematically assessing model performance, practitioners can select the most suitable algorithms and configurations for their specific datasets and objectives.

Evaluation metrics provide quantitative measures of a model’s predictive accuracy and generalization ability. Optimization techniques such as hyperparameter tuning aim to enhance model performance by refining key parameters while avoiding overfitting. Cross-validation procedures further strengthen model robustness by testing performance across multiple data subsets, ensuring that the model’s predictions are consistent and reliable when applied to unseen data.

Assessment Metrics for Prediction Performance

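Understanding the effectiveness of engagement prediction models requires analyzing several metrics. The four below apply when engagement is framed as a classification task, for example labeling each post as high or low engagement; when the model predicts a continuous value such as a like count, error metrics such as mean absolute error (MAE) or root mean squared error (RMSE) are used instead.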

- Accuracy: Represents the proportion of correct predictions among all predictions made. It is most informative when classes are balanced, providing a quick snapshot of overall performance.

- Precision: Measures the proportion of true positive predictions among all positive predictions. High precision indicates that when the model predicts high engagement, it is usually correct. This is crucial when false positives are costly, such as overestimating engagement leading to misallocated marketing resources.

- Recall: Also known as sensitivity, it quantifies the proportion of actual positive cases (e.g., genuinely high engagement posts) correctly identified by the model. High recall is essential when missing high-engagement instances can result in lost opportunities.

- F1 Score: The harmonic mean of precision and recall, providing a balanced measure that considers both false positives and false negatives. It is especially useful when seeking a trade-off between precision and recall, often applicable in engagement prediction scenarios with imbalanced classes.
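For a binary high-versus-low engagement label, all four metrics follow directly from the confusion-matrix counts. A minimal sketch; the counts in the usage line are made up for illustration.

```python
def classification_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts: 80 high-engagement posts correctly flagged, 20 false alarms,
# 40 high-engagement posts missed, 160 low-engagement posts correctly rejected.
metrics = classification_metrics(tp=80, fp=20, fn=40, tn=160)
print(metrics)
```

Here accuracy and precision are both 0.80, but recall is only about 0.67 because a third of the genuinely high-engagement posts were missed; F1 (8/11, about 0.73) reflects that imbalance.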

Hyperparameter Tuning and Overfitting Prevention

Optimizing model performance involves adjusting hyperparameters—settings that govern the learning process—such as learning rate, number of trees (in ensemble models), or regularization strength. Proper tuning can significantly improve predictive accuracy while reducing the risk of overfitting, where the model captures noise rather than underlying patterns.

Grid search and random search are common methods for hyperparameter tuning. Grid search exhaustively evaluates every combination in a predefined grid, while random search evaluates a fixed number of randomly sampled combinations, which often finds effective configurations with far fewer trials.
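Both strategies are available in scikit-learn as GridSearchCV and RandomizedSearchCV. A sketch assuming scikit-learn, synthetic data, and an illustrative grid for a random forest; the grid values are arbitrary choices, not recommendations.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV

X, y = make_regression(n_samples=300, n_features=8, n_informative=5, noise=15.0, random_state=0)

# Illustrative search space: 2 x 3 = 6 combinations.
param_grid = {"n_estimators": [50, 100], "max_depth": [4, 8, None]}

# Grid search: evaluates all 6 combinations, each with 3-fold cross-validation.
grid = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid,
    cv=3,
    scoring="neg_mean_absolute_error",
)
grid.fit(X, y)

# Random search: evaluates only n_iter=4 combinations sampled from the same space.
rand = RandomizedSearchCV(
    RandomForestRegressor(random_state=0),
    param_distributions=param_grid,
    n_iter=4,
    cv=3,
    scoring="neg_mean_absolute_error",
    random_state=0,
)
rand.fit(X, y)

print("grid best:", grid.best_params_, f"MAE={-grid.best_score_:.2f}")
print("random best:", rand.best_params_, f"MAE={-rand.best_score_:.2f}")
```

With lists as the search space, random search samples combinations without replacement; passing continuous distributions instead lets it explore values a hand-written grid would never include.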

Regularization techniques, such as L1 and L2 penalties, are employed to constrain model complexity, thus preventing overfitting. Early stopping, where training halts once performance on validation data plateaus, is also effective. Cross-validation during hyperparameter tuning ensures that selected parameters generalize well across different data subsets.
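The sketch below shows both ideas in scikit-learn, assuming synthetic data with 20 features of which only 5 are informative. Ridge (L2) and Lasso (L1) illustrate penalty-based regularization; GradientBoostingRegressor's validation_fraction and n_iter_no_change parameters illustrate early stopping. The alpha values and patience are arbitrary illustrative settings.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.linear_model import Lasso, Ridge

X, y = make_regression(n_samples=400, n_features=20, n_informative=5, noise=10.0, random_state=1)

# L2 (Ridge) shrinks coefficients toward zero but rarely makes them exactly zero;
# L1 (Lasso) can set uninformative coefficients to exactly zero.
ridge = Ridge(alpha=1.0).fit(X, y)
lasso = Lasso(alpha=1.0).fit(X, y)
print("non-zero Ridge coefficients:", int((ridge.coef_ != 0).sum()), "of", X.shape[1])
print("non-zero Lasso coefficients:", int((lasso.coef_ != 0).sum()), "of", X.shape[1])

# Early stopping: hold out 10% of the training data internally and stop adding trees
# once the validation score has not improved (by at least tol) for 10 iterations.
gbm = GradientBoostingRegressor(
    n_estimators=500,
    validation_fraction=0.1,
    n_iter_no_change=10,
    random_state=1,
).fit(X, y)
print("trees actually fitted:", gbm.n_estimators_, "of a maximum of 500")
```

The fitted n_estimators_ attribute records how many boosting stages were kept, which is a quick way to see whether early stopping triggered.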

Evaluation Results of Different Models and Parameters

The following table summarizes the evaluation metrics obtained from various models and hyperparameter settings, illustrating how different configurations impact predictive performance.

| Model / Parameter Setting | Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| Logistic Regression (default) | 0.78 | 0.75 | 0.70 | 0.72 |
| Random Forest (n_estimators=100, max_depth=10) | 0.83 | 0.80 | 0.77 | 0.78 |
| SVM (linear kernel, C=1.0) | 0.81 | 0.78 | 0.73 | 0.75 |
| XGBoost (learning_rate=0.1, n_estimators=150, max_depth=8) | 0.85 | 0.82 | 0.79 | 0.80 |
| Neural Network (2 hidden layers, dropout=0.5) | 0.82 | 0.79 | 0.75 | 0.77 |

Cross-Validation for Robustness

Cross-validation is a crucial process to ensure that model performance is consistent and not dependent on a specific train-test split. K-fold cross-validation involves partitioning the dataset into ‘k’ equal parts, training the model on ‘k-1’ parts, and validating on the remaining part. This process repeats ‘k’ times, with each subset serving as validation once.
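The partitioning itself is simple to write out. A minimal pure-Python sketch that produces the k train/validation index splits described above; it keeps folds contiguous and unshuffled for clarity, whereas library implementations such as scikit-learn's KFold can also shuffle.

```python
def k_fold_indices(n_samples: int, k: int) -> list[tuple[list[int], list[int]]]:
    """Split indices 0..n_samples-1 into k folds; each fold serves once as the validation set."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    splits = []
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        splits.append((train, val))
        start += size
    return splits


for train_idx, val_idx in k_fold_indices(n_samples=10, k=3):
    print(f"train on {len(train_idx)} posts, validate on {val_idx}")
```

Every index lands in exactly one validation fold, so averaging the k validation scores uses all of the data for evaluation without ever scoring a model on posts it was trained on.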

Cross-validation does not by itself prevent overfitting, but it exposes it and provides a more reliable estimate of how the model will perform on unseen data. When combined with hyperparameter tuning, it helps select configurations that generalize well across different data segments, ultimately leading to more accurate engagement rate predictions.

Practical Application of AI-Based Engagement Forecasting

Implementing AI-driven engagement predictions within content planning workflows enhances the ability to deliver targeted, relevant, and timely content to audiences. By translating predictive insights into actionable strategies, organizations can optimize their social media presence, improve user interactions, and achieve better return on investment. Seamlessly integrating these predictions into daily operations ensures that content creators and marketers can respond proactively to audience trends and preferences.

This section explores key strategies for embedding AI-based engagement forecasts into content development processes, visualizing predictive data through dashboards, deriving actionable insights to refine posting strategies, and establishing effective reporting templates to facilitate ongoing analysis and decision-making.

Integrating Predictions into Content Planning Workflows

Embedding AI-generated engagement forecasts into existing content workflows enables teams to prioritize content themes, formats, and timing based on predicted performance. This integration involves several key strategies:

- Establishing automated data feeds from AI models into content management systems (CMS) to provide real-time insights.

- Scheduling content based on high-engagement prediction windows, such as optimal posting times identified by the model.

- Aligning content types with predicted audience preferences, like favoring videos over static images if forecasts suggest higher engagement for video content.

- Creating feedback loops where actual performance metrics are fed back into the AI models to continuously improve prediction accuracy.
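Scheduling content around high-engagement windows comes down to ranking candidate slots by their forecast. A minimal sketch, assuming the model has already produced a predicted engagement rate for each (weekday, hour) slot. The forecast values are placeholders, and the min_gap_hours spacing rule is a hypothetical policy added so that posts are not stacked back to back on the same day.

```python
from typing import NamedTuple


class Slot(NamedTuple):
    weekday: str
    hour: int  # 24-hour clock; assumed to be in the audience's local time zone
    predicted_rate: float


def best_slots(forecast: list[Slot], n: int, min_gap_hours: int = 3) -> list[Slot]:
    """Pick the n highest-forecast slots, skipping slots too close to one already chosen that day."""
    if n <= 0:
        return []
    chosen: list[Slot] = []
    for slot in sorted(forecast, key=lambda s: s.predicted_rate, reverse=True):
        too_close = any(
            c.weekday == slot.weekday and abs(c.hour - slot.hour) < min_gap_hours
            for c in chosen
        )
        if not too_close:
            chosen.append(slot)
        if len(chosen) == n:
            break
    return chosen


# Placeholder forecasts, not real model output.
forecast = [
    Slot("Sat", 10, 0.061), Slot("Sat", 11, 0.058), Slot("Sun", 19, 0.055),
    Slot("Wed", 8, 0.042), Slot("Wed", 18, 0.047),
]
for slot in best_slots(forecast, n=3):
    print(slot.weekday, f"{slot.hour:02d}:00", f"{slot.predicted_rate:.1%}")
```

Saturday 11:00 is skipped despite its high forecast because it falls within three hours of the already chosen Saturday 10:00 slot.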

Visualizing Engagement Predictions Using Charts and Dashboards

Effective visualization of engagement forecasts allows teams to interpret complex data quickly and make informed decisions. Dashboards should encompass key metrics and visual elements such as:

- Line charts illustrating projected engagement trends over time, highlighting peak periods for audience activity.

- Bar charts comparing predicted engagement across different content types or topics, aiding in content prioritization.

- Heatmaps indicating optimal posting times based on forecasted audience responsiveness.

- Interactive filters that enable users to view predictions by platform, audience segment, or content category.
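The data behind a posting-time heatmap is a weekday-by-hour grid of forecasts. Assuming predictions sit in a pandas DataFrame (the column names and values below are hypothetical), pivot_table builds that grid, which can then be exported to a BI tool or passed to a plotting library.

```python
import pandas as pd

# Hypothetical model output: one predicted engagement rate per candidate post.
predictions = pd.DataFrame({
    "weekday": ["Mon", "Mon", "Tue", "Tue", "Mon"],
    "hour": [9, 18, 9, 18, 9],
    "predicted_rate": [0.030, 0.045, 0.028, 0.050, 0.034],
})

# Rows = weekday, columns = hour, cells = mean predicted rate for that slot.
heatmap_data = predictions.pivot_table(
    index="weekday", columns="hour", values="predicted_rate", aggfunc="mean"
)
print(heatmap_data.round(3))
```

Averaging with aggfunc="mean" collapses multiple forecasts for the same slot (the two Monday 09:00 rows here) into one cell; slots with no forecasts appear as NaN, which most heatmap tools render as blank.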

Using visualization tools like Tableau, Power BI, or custom dashboards enhances accessibility and facilitates cross-team collaboration on strategic planning.

Deriving Actionable Insights from AI Predictions

Transforming engagement forecasts into practical strategies involves analyzing predicted data to inform content decisions. Some examples include:

- Adjusting posting schedules to align with predicted high-engagement time slots, such as early mornings or evenings when audience activity peaks.

- Modifying content formats based on predicted preferences, like increasing short-form videos if forecasts indicate higher engagement rates with video content.

- Targeting specific audience segments with personalized content recommendations supported by engagement predictions.

- Testing new content themes or topics that the AI models forecast as promising for higher engagement, thereby innovating content strategies based on data-driven insights.

For instance, if the AI forecast predicts a 25% increase in engagement for user-generated content during weekends, a brand might schedule more UGC campaigns on Saturdays and Sundays to maximize reach and interactions.

Template Structures for Reports and Dashboards

Creating standardized templates ensures consistency and efficiency in reporting engagement predictions and outcomes. An example of an HTML dashboard template includes:

```html
<table border="1" cellpadding="10">
  <tr>
    <th>Date</th>
    <th>Content Type</th>
    <th>Predicted Engagement Rate</th>
    <th>Actual Engagement Rate</th>
    <th>Performance Variance</th>
  </tr>
  <tr>
    <td>2024-04-10</td>
    <td>Video</td>
    <td>15%</td>
    <td>12%</td>
    <td>-3%</td>
  </tr>
  <tr>
    <td>2024-04-11</td>
    <td>Image</td>
    <td>8%</td>
    <td>10%</td>
    <td>+2%</td>
  </tr>
</table>
```

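In this template, Performance Variance is the actual rate minus the predicted rate, expressed in percentage points. The template can be extended to include visual charts, trend analyses, and annotations for strategic adjustments.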
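Filling such a table by hand does not scale, so the rows are usually generated from prediction logs. A minimal sketch, assuming each record is a dict with the hypothetical keys date, content_type, predicted, and actual (rates in percent), and computing variance as actual minus predicted, which reproduces the -3% and +2% rows of the template.

```python
from html import escape


def report_rows(records: list[dict]) -> str:
    """Render one <tr> per record; variance is actual minus predicted, in percentage points."""
    rows = []
    for r in records:
        variance = r["actual"] - r["predicted"]
        cells = [
            escape(r["date"]),
            escape(r["content_type"]),
            f"{r['predicted']:g}%",
            f"{r['actual']:g}%",
            f"{variance:+g}%",  # explicit sign so over- and under-performance stand out
        ]
        rows.append("<tr>" + "".join(f"<td>{c}</td>" for c in cells) + "</tr>")
    return "\n".join(rows)


html_out = report_rows([
    {"date": "2024-04-10", "content_type": "Video", "predicted": 15, "actual": 12},
    {"date": "2024-04-11", "content_type": "Image", "predicted": 8, "actual": 10},
])
print(html_out)
```

Text fields pass through html.escape so that content labels containing characters like < or & cannot break the table markup.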

Regular use of such reports ensures continuous monitoring and improvement of content strategies based on AI-driven insights.

Challenges and Limitations of AI in Engagement Prediction

Predicting engagement rates using artificial intelligence offers numerous advantages, but it also presents inherent challenges and limitations that need careful consideration. Understanding these issues is crucial for developing robust and effective predictive models. This section explores common pitfalls such as data bias, model generalization issues, and the impact of platform changes, along with best practices for maintaining model accuracy over time through continuous updates.

Real-world case studies highlight scenarios where these challenges manifest and offer insights into managing them effectively.

Data Bias and Representation Issues

AI models heavily rely on historical data to make predictions, making the quality and diversity of this data paramount. When training datasets lack diversity or contain inherent biases, the models tend to perpetuate these biases, resulting in skewed engagement predictions. For instance, if a social media platform’s data predominantly features content from a specific demographic or geographic region, the model may inaccurately predict engagement for underrepresented groups or regions.

This leads to unfair or ineffective content recommendations, ultimately impacting user experience and engagement metrics. Ensuring data collection encompasses a broad and inclusive range of user behaviors, demographics, and content types is essential to mitigate bias and improve model fairness.
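One simple way to surface this kind of bias is to break validation error down by segment: a segment with far larger error, or very few samples, is a sign of under-representation. A minimal sketch; the segment names and engagement rates are made up for illustration.

```python
from collections import defaultdict


def error_by_segment(rows: list[tuple[str, float, float]]) -> dict[str, tuple[int, float]]:
    """Return {segment: (sample_count, mean absolute error)} from (segment, actual, predicted) rows."""
    errors: dict[str, list[float]] = defaultdict(list)
    for segment, actual, predicted in rows:
        errors[segment].append(abs(actual - predicted))
    return {seg: (len(errs), sum(errs) / len(errs)) for seg, errs in errors.items()}


# Hypothetical validation results: engagement rates in percent, grouped by audience region.
rows = [
    ("region_A", 4.0, 4.2), ("region_A", 3.5, 3.4), ("region_A", 5.0, 4.8),
    ("region_B", 6.0, 3.9),
]
summary = error_by_segment(rows)
for segment, (count, mae) in summary.items():
    print(f"{segment}: n={count}, MAE={mae:.2f} points")
```

In this toy data, region_B has a single sample and an error more than ten times that of region_A, which would prompt collecting more region_B data before trusting the model there.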

Model Generalization and Overfitting

A significant challenge in AI-driven engagement prediction is developing models that generalize well across diverse scenarios. Overfitting occurs when a model learns noise or specific patterns in training data too precisely, causing poor performance on new, unseen data. Conversely, underfitting results in a model that is too simplistic to capture underlying patterns, reducing accuracy. For example, a model trained exclusively on data from a particular campaign or time period may not accurately predict future engagement when the campaign shifts or seasonal trends change.

Implementing techniques such as cross-validation, regularization, and maintaining a validation set helps ensure models generalize effectively across different contexts and datasets.

Impact of Platform Changes and External Factors

Social media platforms and content distribution channels frequently undergo algorithm updates, feature modifications, and policy changes, all of which can significantly influence engagement patterns. These external factors can render previously trained models less effective or obsolete. For example, a platform introducing new content prioritization algorithms may alter user interaction behaviors, making earlier engagement predictors inaccurate. Additionally, unforeseen events like global crises or viral trends can drastically shift engagement dynamics.

To address this, AI models must be adaptable, incorporating mechanisms for rapid retraining and adjustment in response to platform updates and external shifts. Staying informed about platform developments and integrating real-time data streams can enhance model resilience.
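One way to notice such shifts before accuracy collapses is to compare the distribution of recent engagement (or of a key input feature) against the training-period distribution. The population stability index (PSI) is one such measure, swapped in here as an example rather than a method the article prescribes. A frequently quoted rule of thumb treats values above about 0.25 as a significant shift; the bin count and the sample data below are illustrative assumptions.

```python
import math


def population_stability_index(baseline: list[float], recent: list[float], bins: int = 5) -> float:
    """PSI between two samples, using equal-width bins spanning the baseline's range."""
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all baseline values are equal

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps log() defined when a bin is empty.
        return [max(c / len(values), 1e-4) for c in counts]

    expected, actual = proportions(baseline), proportions(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


# Hypothetical engagement rates (percent) before and after a platform algorithm change.
baseline = [1.0 + 0.05 * i for i in range(100)]
shifted = [v + 2.0 for v in baseline]
print(f"unchanged: {population_stability_index(baseline, baseline):.3f}")
print(f"after change: {population_stability_index(baseline, shifted):.3f}")
```

A PSI check is cheap enough to run on every data refresh, so it can serve as an early trigger for the retraining described above.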

Best Practices for Continuous Model Updating

Maintaining the accuracy and relevance of AI-based engagement prediction models requires ongoing vigilance and iterative improvement. Regularly updating models with fresh data captures evolving user behaviors, content trends, and platform features. Implementing automated data pipelines ensures continuous collection and preprocessing of new engagement data, facilitating timely retraining. Moreover, establishing monitoring systems for key performance metrics helps identify model degradation early.

When performance drops are detected, prompt retraining with updated datasets allows the model to adapt, preserving prediction accuracy. Incorporating feedback loops where predictions are validated against actual engagement outcomes further refines model performance over time.
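Monitoring and retraining triggers can start very simply. A minimal sketch of a rolling-error monitor, assuming predictions are later matched to actual engagement; the baseline error, window size, and 1.5x tolerance are hypothetical policy choices, not recommended values.

```python
from collections import deque


class ErrorMonitor:
    """Track rolling mean absolute error and flag when it exceeds a tolerance over a baseline."""

    def __init__(self, baseline_mae: float, window: int = 50, tolerance: float = 1.5):
        self.baseline_mae = baseline_mae
        self.tolerance = tolerance  # hypothetical policy: retrain at 1.5x the baseline error
        self.errors = deque(maxlen=window)  # oldest errors drop out automatically

    def record(self, predicted: float, actual: float) -> None:
        self.errors.append(abs(actual - predicted))

    def rolling_mae(self) -> float:
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_retraining(self) -> bool:
        # Wait for a full window so a handful of early outliers cannot trigger retraining.
        full = len(self.errors) == self.errors.maxlen
        return full and self.rolling_mae() > self.tolerance * self.baseline_mae


monitor = ErrorMonitor(baseline_mae=0.5, window=4)
for predicted, actual in [(3.0, 3.2), (2.5, 2.4), (4.0, 2.0), (3.5, 1.9)]:
    monitor.record(predicted, actual)
print(f"rolling MAE={monitor.rolling_mae():.2f}, retrain={monitor.needs_retraining()}")
```

Here the last two predictions overshoot badly, pushing the rolling error to about 0.98, above the 0.75 threshold, so the monitor flags the model for retraining.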

Case Studies Illustrating Limitations

Real-world scenarios highlight how limitations can impact engagement prediction efforts. In one instance, a major social media platform experienced a sudden decline in model performance following an algorithm overhaul that prioritized different content types. The pre-existing models, trained on older engagement patterns, failed to accurately forecast user interactions with new content formats. The platform responded by retraining models with data reflecting the new algorithmic landscape, emphasizing the importance of continual updates.

Another example involves a marketing campaign that relied on AI predictions to target specific audience segments. Due to biased training data favoring certain demographics, the engagement predictions were skewed, resulting in ineffective ad placements and diminished ROI. These cases underscore the necessity of diverse data collection and ongoing model evaluation to navigate limitations inherent in AI-driven engagement forecasting.

Conclusion

In conclusion, predicting engagement rates using AI offers immense opportunities for refining content strategies and improving audience interaction. While challenges like data bias and platform changes exist, ongoing model optimization and data updates can mitigate these issues. Embracing AI-driven predictions enables creators to make smarter, data-informed decisions that foster stronger connections with their audiences and achieve higher engagement outcomes.